The start of a new year, and a new decade, is always a good time to stop and get one’s bearings. Whether I look forward or backward over a ten-year span, what I see most is change. Specifically, I see technology rapidly altering the way we live.

Ten years ago, did you ever utter the words “Hey, Alexa”? Did you even consider changing your home thermostat from the office, or monitoring your fitness from your watch? Did you think of a tablet as anything but a pad of paper or an aspirin? And just how often did the terms “uber” or “swipe left” even come up in conversation?

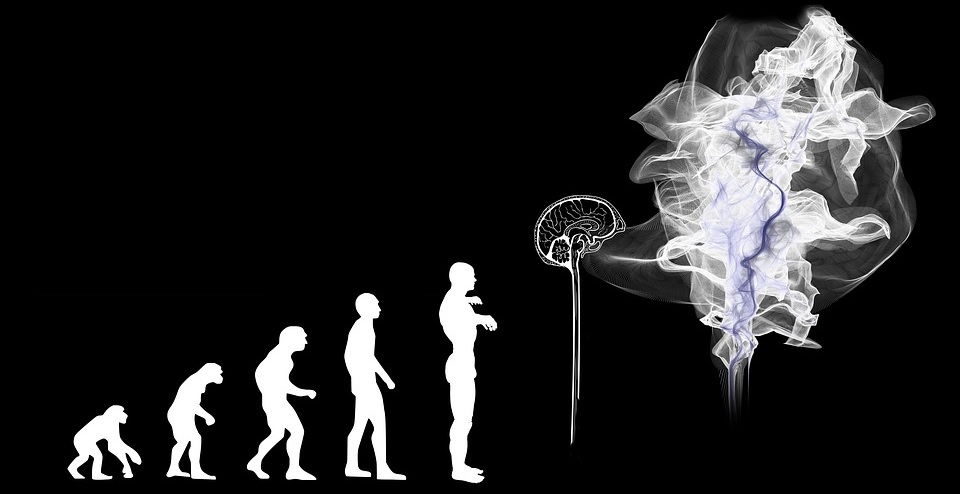

All of that and more has changed within the last decade, and this bullet train shows no signs of slowing down. Space tourism, electric cars, human augmentation, and more are positioned to enter the mainstream in the next few years. Even things like human gene editing, quantum computing, and asteroid mining, ideas that were pure science fiction just a few years ago, may soon become practical realities.

In financial technology, I believe developments like blockchain and biometric identification will have a huge impact on combating fraud at the merchant level. I’d love to say they could mark the end of fraud, but I’m not quite that optimistic!

Progress: Good or Bad?

The next decade will throw a lot at us. Of course, that raises concerns about privacy, security, and morality. Are we ready for the world we’re creating?

I’m certainly not the first person to ask whether we’re prepared—philosophically or psychologically—to deal with new potentialities. To paraphrase Jeff Goldblum in Jurassic Park, perhaps we have become so preoccupied with what we could do, we’re not stopping to wonder if maybe there are things we shouldn’t.

Still, trying to pigeonhole any particular technology as “good” or “bad” is largely pointless. In most cases, it’s not the tech, but the application.

Generally speaking, technology is harmless without a human being wielding it. However, you could also flip that: if the technology didn’t exist, it couldn’t be used against anyone. Toss those two perspectives around long enough, and you’ll eventually end up with an unsolvable, chicken-or-the-egg argument. In my opinion, that is the problem we face today: not the morality of technology, but the fact that we’re looking for answers that don’t exist.

Maybe We Need Better Questions

With so many new technologies bombarding us, it’s a bit naïve to think that society will stop long enough to consider each one and ask whether we should or shouldn’t adopt it. Even if it did, that wouldn’t solve the problem, since we have a backlog of new tech we’re already in the process of implementing. The future is already here, it touches all our lives, and we are NOT going to get that genie back in the bottle.

Instead of trying to polarize opinions into a black-and-white dichotomy, perhaps we need to acknowledge that, for better or worse, we are responsible for our technology. We can take advantage of new discoveries, but we must live with the results. With automation, for example, we’ve reached a point where computers and robots can perform many tasks faster and more accurately than humans. AI has evolved to the point where automated systems can even maintain themselves, to some degree.

That sounds positive, but it’s not the full picture. As automation becomes increasingly common over the next decade, the need for human workers will shrink. In fact, some experts predict that 25% or more of currently held positions could be gone by 2030.

So on the one hand, we have greater efficiency, which generally leads to lower operating costs. On the other hand, we’re potentially automating a quarter of our workforce right out of a job. Yes, automation will create new positions, but as time passes, there will be a net reduction in our need for human labor. Asking whether that’s good or bad isn’t a very useful question; instead, we should examine how to react to that inevitability.

Looking at Both Sides

Technology is not going to stop evolving, and the results are always going to be a mixed bag. So perhaps our decision-making processes should take a wider approach than simply “either/or.”

I believe we must be willing to accept that every new development has the potential to be both good and bad. That understanding leads us to a more relevant form of questioning: what is the maximum amount of benefit we can achieve by implementing a given technology? What is the maximum potential for harm to businesses, workers, and clients? Can the end justify the means?

Obviously, that answer will vary based on business, industry, market, and even the technology itself. Each situation is different, so absolute moral judgements will simply not stick in most cases.

Certainly, it would be easier if there were a universal code that neatly categorized every new development in technology. But there isn’t and there never will be, and that’s ultimately for the best. Even as the world grows more standardized, there is still room, and a need, for individuality, and for personal and corporate responsibility.

Can a new technology be good or bad? The answer is “Yes.”

It will be both, and it will be neither, and arguing for an absolute label takes our focus off where it should be. Our job is to figure out which technologies work for us, our businesses, our customers, and ultimately, our society.

Changes, especially technological changes, are coming. Most of them can be put to positive use, with few or no negative side effects. Those that won’t be of benefit…well, remember that you don’t have to use a technology just because it’s there.